After a full day of talks, demos, and AMAs, I wanted to provide a little more color and detail about OpenAI’s Dev Day 2025. I did not know exactly what to expect from this event, but it felt like OpenAI’s WWDC: a ton of new products were announced and demoed, glued together by talks and stage conversations from OpenAI leadership. I’ll just mention the ones that left the biggest impression on me:

- Agents SDK: To be quite frank, my opinion of agents was that they are overrated - a solution looking for a problem. However, the new Agents SDK and the accompanying drag-and-drop web UI, together with powerful eval and deployment tooling, are changing my mind a bit. This SDK will definitely make agents more accessible beyond the software engineering agent use-case. See the Agent Builder UI below:

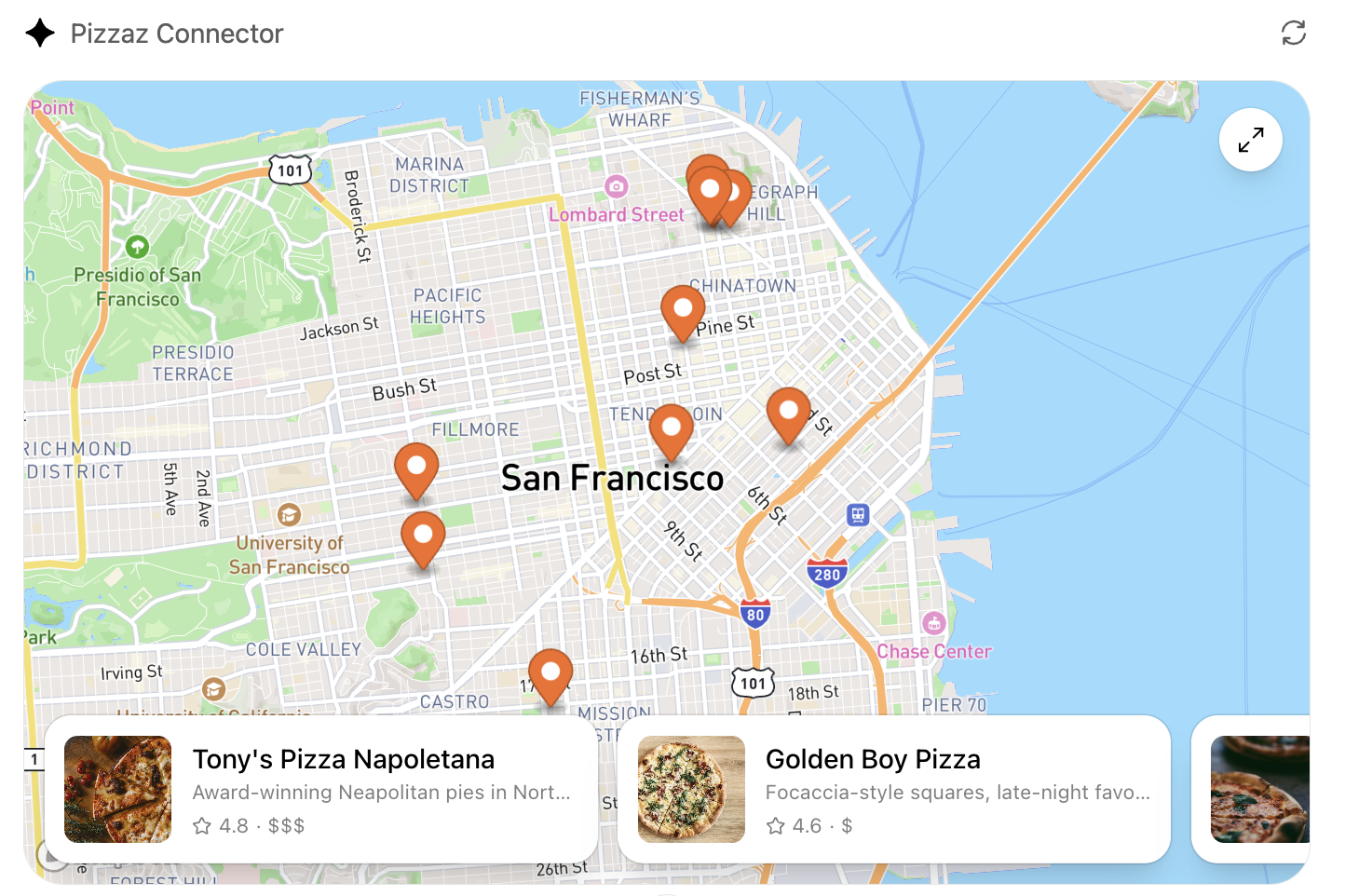

- Apps SDK: This SDK enables apps to be directly integrated into ChatGPT’s main web (or mobile) UI. There are two aspects I want to highlight here. The first is the direct integration of apps into the ChatGPT UI, which enables a seamless experience between chat/reasoning and app-specific interaction, along with customizable UI elements, or widgets as OpenAI called them (such as maps). The other is the power of MCP extensions that Codex and chat can take advantage of to, for example, take control of physical devices like onstage lighting, cameras, and audio systems. The Codex SDK took this even further: the Codex agent was directly integrated into a mobile fitness tracker app such that the app could “self-evolve” and be changed on-the-fly (triggered by a user prompt to add more trend plots, for example).

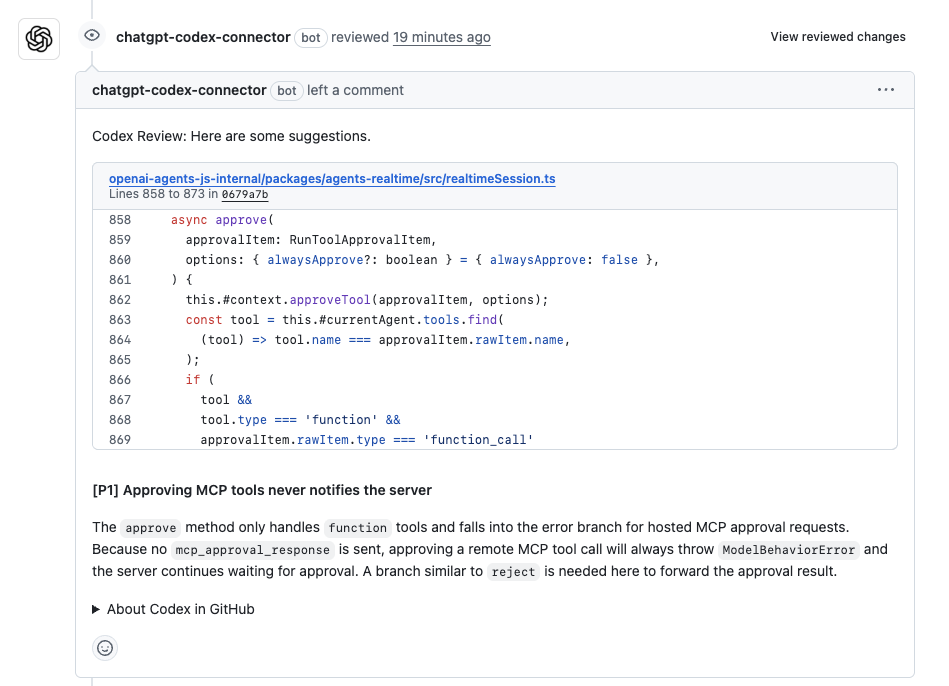

- Codex everywhere - in CLI, IDE, and web. Not just for writing code but also for powerful code review capabilities. I’ve seen before how automated code review can introduce a lot of noise with overzealous commenting. Codex review, on the other hand, focuses on providing fewer but higher-signal comments. These are supposedly based on Codex writing tests and scripts to “pen test” the PR under review. In a sense, one Codex instance is writing the code while another Codex instance (with a separate context) is reviewing it. Definitely something I am excited to try out to address the code review bottleneck. Code review was shown via the Codex CLI (via ) but also the GitHub web UI (see the Code review setup guide).

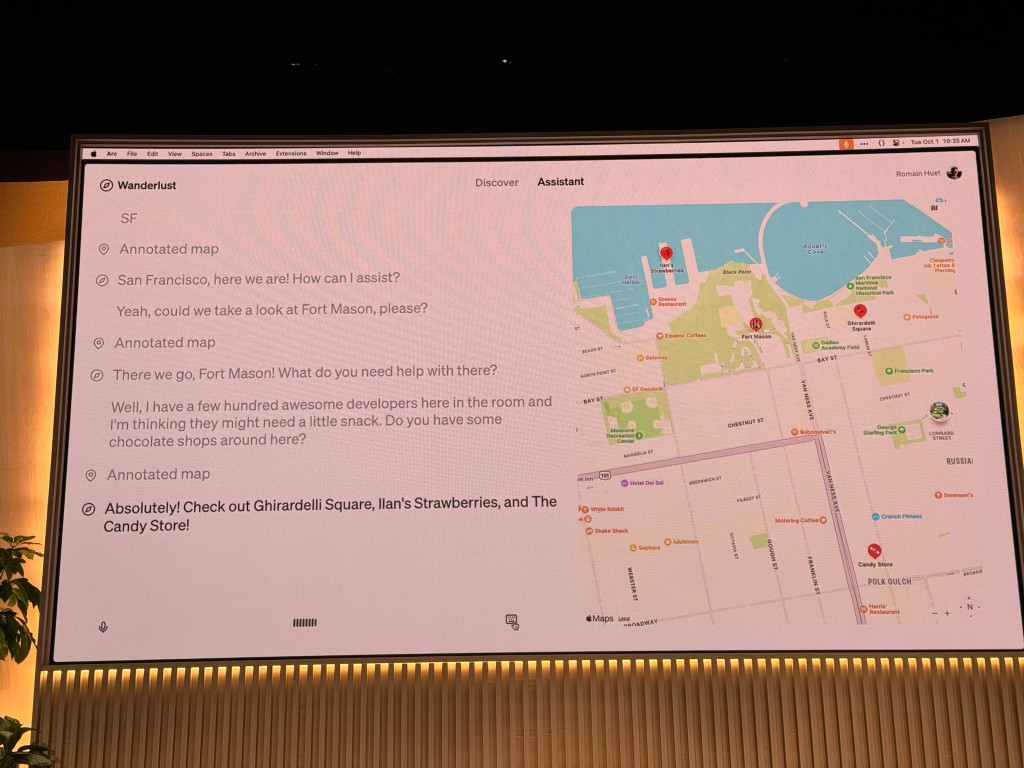

- Codex with Figma MCP integration and also Chrome dev-tools MCP for performance optimization. I enjoyed this demo quite a bit too, mainly because it almost entirely automated web-app development - from designs in Figma, to MVP implementation, to performance tuning via the Chrome dev tools MCP server. What’s more, Codex can take screenshots via Playwright to verify that the implementation stays faithful to the original design. A highly practical demonstration of agentic workflows and the reasoning and tool-calling power of gpt-5-codex. The demo app “Wanderlust” - an app that proposes travel itineraries in a sleek web UI - has been recreated in this (unofficial?) repo. See the photo below (borrowed from Simon Willison’s blog).

- gpt-oss: My main takeaway from this talk was the impressive possibilities of running powerful reasoning and agentic models directly on-device. Even the 120B version of the model can run on consumer hardware like a MacBook (via Ollama). This is not unique to OpenAI’s gpt-oss, but it was nonetheless impressive to see a demo using tool calling (including outsourcing web UI development to the more capable but cloud-based gpt-5). I’ll definitely be trying out gpt-oss for on-device applications. The only thing I wish gpt-oss supported was multimodal (in particular image) inputs.

- Last but not least, the conversation between Sam and Jony Ive was refreshing to listen to. No big product reveals, but they did hint at a “product family” and said that they have 15-20 promising ideas. That leaves much room for speculation as to what the supposed wearable is going to be.
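
To make the gpt-oss point above a bit more concrete, here is a minimal sketch of what tool calling against a local model served by Ollama could look like. This is not the code from the demo. It assumes Ollama is running on its default local port and that a gpt-oss model has been pulled under the tag `gpt-oss:120b`; the `delegate_ui_task` tool is a hypothetical stub standing in for "hand the web UI work to a cloud model", and the exact shape of tool-result messages may differ between Ollama versions.

```python
import json
import urllib.request

# Assumptions: Ollama's default local endpoint and a locally pulled gpt-oss tag.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"
MODEL = "gpt-oss:120b"


def delegate_ui_task(description: str) -> str:
    """Hypothetical tool: a stub for handing UI work to a cloud-hosted model."""
    return f"[stub] would send to a cloud model: {description}"


# Tool schema advertised to the local model (JSON-schema style function spec).
TOOLS = [{
    "type": "function",
    "function": {
        "name": "delegate_ui_task",
        "description": "Hand a web UI implementation task to a more capable cloud model.",
        "parameters": {
            "type": "object",
            "properties": {"description": {"type": "string"}},
            "required": ["description"],
        },
    },
}]

# Maps tool names the model may request to local Python callables.
TOOL_REGISTRY = {"delegate_ui_task": delegate_ui_task}


def build_chat_request(prompt: str) -> dict:
    """Build a non-streaming chat payload that includes the tool schema."""
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "tools": TOOLS,
        "stream": False,
    }


def run_tool_calls(message: dict) -> list:
    """Run every tool call in an assistant message; return tool-role messages."""
    results = []
    for call in message.get("tool_calls", []):
        fn = call["function"]
        args = fn["arguments"]
        if isinstance(args, str):  # tolerate JSON-encoded arguments
            args = json.loads(args)
        output = TOOL_REGISTRY[fn["name"]](**args)
        results.append({"role": "tool", "content": output})
    return results


def chat(prompt: str) -> dict:
    """Send the request to a locally running Ollama server and return its message."""
    request = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(build_chat_request(prompt)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["message"]
```

With a server running, `run_tool_calls(chat("Build me a landing page"))` would execute whatever tool calls the local model decides to make; the results would then be appended to the conversation and sent back for a final answer.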

OpenAI covered a lot of ground during Dev Day, and any of the topics above warrants more in-depth experimentation and discussion - stay tuned for those in follow-up blog posts.

Stay curious!

Daniel